-

Visualizing Elasticsearch Function Scores

One of the most common questions we get from our WordPress VIP clients, many of whom are large media companies that publish constantly, is how they can bias their search results towards more recent content when scoring and sorting them. This type of problem is…

-

Balancing Kafka on JBOD

At Automattic we run a diverse array of systems and, as at many companies, Kafka is the glue that ties them together, letting us shuffle data back and forth. Our experience with Kafka has thus far been fantastic: it’s stable, provides excellent throughput, and the simple API makes it trivial to hook any of our systems up to it.…

-

Log Analysis With Hive

At Automattic we see over 131M unique visitors per month from the US alone. As part of the data team, we are responsible for taking in the stream of Nginx logs and turning them into counts of views and unique visitors per day, week, and month, on both a per-blog and a global basis. To…

-

Building a Faster ETL Pipeline with Flume, Kafka, and Hive

-

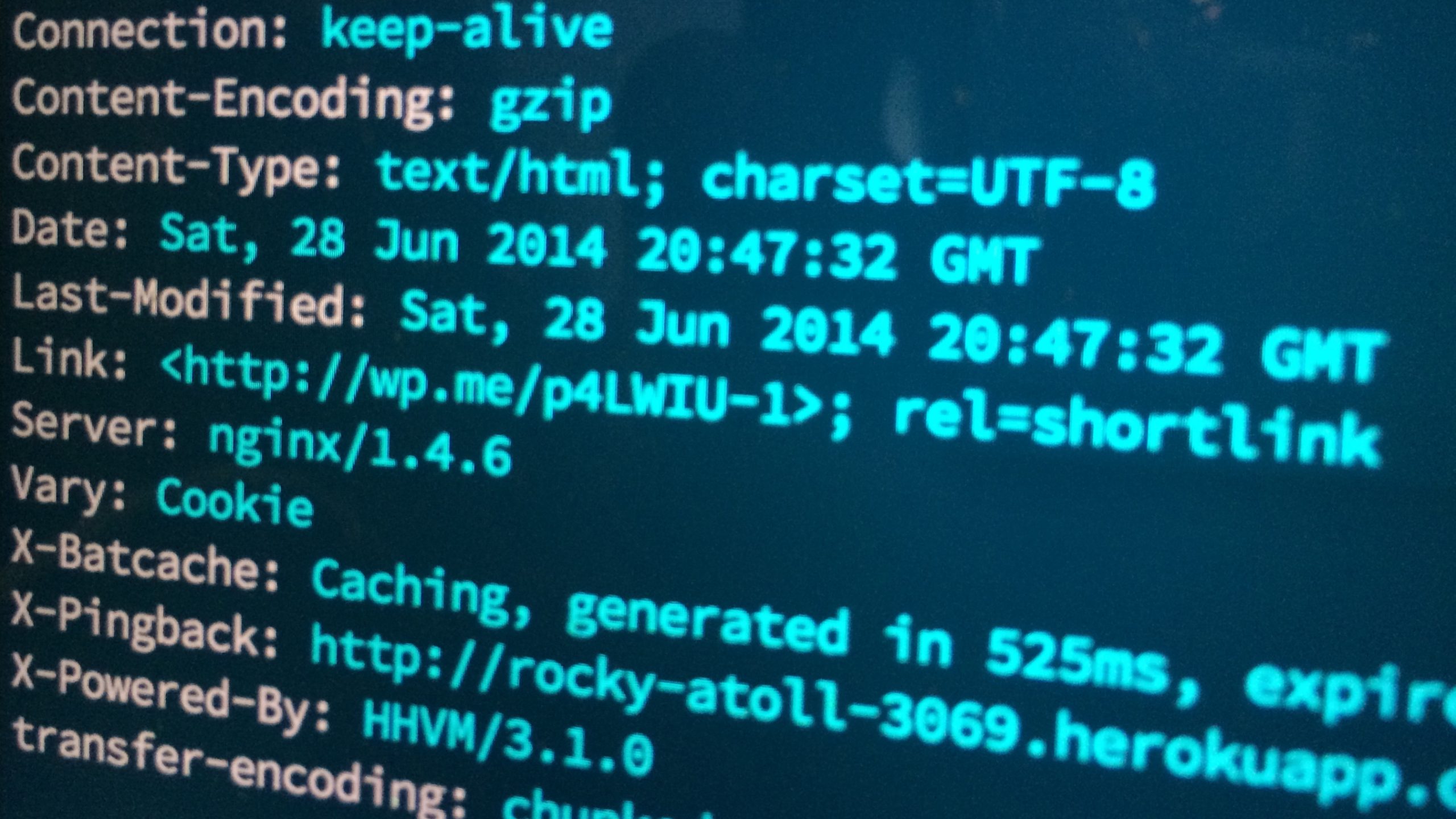

WordPress Performance with HHVM

With Heroku-WP I hoped to lower the bar in getting WordPress up and running on a more modern tech stack. But what are the performance implications of running WordPress on such a modern set of technologies? Surely it’s faster, but by how much, and are the performance gains worth the trouble? To answer that I’ve…

-

Elasticsearch StatsD Plugin

If you’re running a multi-node Elasticsearch cluster, check out Automattic’s fork of the Elasticsearch StatsD Plugin for pushing cluster and node metrics to StatsD.

-

WordPress on NGINX + HHVM with Heroku Buildpacks

It’s been a year since I last made any major changes to my WordPress on Heroku build, and in tech years that’s a lifetime. Since then Heroku has released a new PHP buildpack with nginx and HHVM built in. Much progress has also been made on both HHVM and WordPress to make them compatible with each…

-

Introducing Whatson, an Elasticsearch Consulting Detective

Over the past few months I’ve been working with the Elasticsearch cluster at Automattic. While we monitor longitudinal statistics on the cluster through Munin, when something is amiss there’s currently no good place to take a look and drill down to see what the issue is. I use various Elasticsearch plugins, however they all…

-

Static Asset Caching Using Apache on Heroku

There have been many articles written over the years about how to properly implement static asset caching, and the best practices boil down to three things. Make sure the server is sending RFC-compliant caching headers. Send long expires headers for static assets. Use version numbers in asset paths so that we can precisely control expiration.
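
As a rough illustration of those three practices, here is a minimal Python sketch. It is not the Apache configuration from the post itself. The helper names `versioned_path` and `static_cache_headers`, the `/v<version>/` path scheme, and the one-year lifetime are all assumptions made for the example.

```python
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime

# Assumed lifetime for static assets: one year, expressed in seconds.
ONE_YEAR = 365 * 24 * 60 * 60


def versioned_path(path, version):
    """Prefix an asset path with a version segment (hypothetical scheme).

    Bumping the version changes the URL, which forces clients to refetch
    even though the old URL is still cached for a long time.
    """
    return "/v{}/{}".format(version, path.lstrip("/"))


def static_cache_headers(now=None, max_age=ONE_YEAR):
    """Build long-lived caching headers for a static asset response."""
    now = now or datetime.now(timezone.utc)
    expires = now + timedelta(seconds=max_age)
    return {
        # Cache-Control takes precedence over Expires in modern clients.
        "Cache-Control": "public, max-age={}".format(max_age),
        # HTTP dates must be formatted in GMT.
        "Expires": format_datetime(expires, usegmt=True),
    }


if __name__ == "__main__":
    print(versioned_path("/css/site.css", 42))
    print(static_cache_headers())
```

Because the version lives in the path, the long expiry is safe: releasing a changed asset means publishing it under a new URL rather than waiting for caches to expire.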

-

20 Million Hits a Day With WordPress For Free

As the price of cloud computing continues to tumble, we can do what was previously unthinkable. It wasn’t long ago that getting WordPress to scale to 10 million hits a day for $15 a month was front-page Hacker News material, but we can do even better.